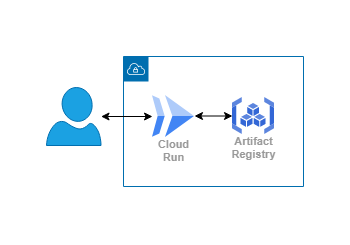

System Outline

When doing data analytics, end users often want to dig into the data or try out several different scenarios. The answer is to give them a dashboard that lets them explore and experiment with the data. There are many tools for this, but most are proprietary and require servers or licenses. R and the Shiny package are open source and can provide a robust interactive interface. The problem is sharing the applications: either the users must have R and Shiny installed and be proficient with them, or you must pay for hosting that you can direct them to. Here, we package our application, together with all its dependencies and data, in a container and run it on Google Cloud Platform (GCP) with Cloud Run. We store the container image in Artifact Registry so that we always have access to it and control over it.