System Outline

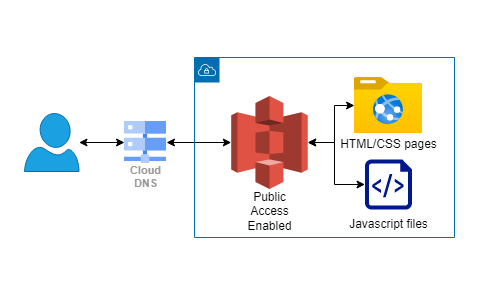

Here I am using the static website hosting option for Amazon Simple Storage Service (S3). I already had a domain registered with Google, so I added a permanent forwarding record that points to the website URL generated for the S3 bucket. Inside the bucket are the HTML, CSS, JS, and image files shown on this page.
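To make the bucket setup a little more concrete, here is a minimal sketch of the two JSON documents that static website hosting on S3 typically involves: a website configuration naming the index and error pages, and a bucket policy that lets anyone read the objects. The bucket name `example-site-bucket` and the file names `index.html` and `error.html` are placeholders I made up for illustration, and the public-read policy is an assumption about a typical setup rather than a copy of the settings used for this site.

```python
import json


def website_configuration(index_document="index.html", error_document="error.html"):
    """Build the website configuration for a bucket.

    The file names are hypothetical defaults, not the ones used on this site.
    """
    return {
        "IndexDocument": {"Suffix": index_document},
        "ErrorDocument": {"Key": error_document},
    }


def public_read_policy(bucket_name):
    """Build a bucket policy that allows anonymous reads of every object.

    Assumption: the site is served straight from the bucket, so the
    objects have to be publicly readable.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


if __name__ == "__main__":
    bucket = "example-site-bucket"  # hypothetical bucket name
    print(json.dumps(website_configuration(), indent=2))
    print(json.dumps(public_read_policy(bucket), indent=2))
```

The same settings can be entered by hand in the S3 console. If you prefer to script it, these dictionaries should be usable with boto3's `put_bucket_website` (as `WebsiteConfiguration`) and `put_bucket_policy` (as a JSON string), though I have left the AWS calls out so the sketch runs without credentials.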